How a Hybrid Speech-to-Text Model Works: A Clear and Simple Breakdown

Introduction

While exploring deep learning resources, I came across something interesting: Speech-to-Text (STT) technology. STT sits at the intersection of Automatic Speech Recognition (ASR) and Natural Language Processing (NLP). I'd like to share some useful information about what it is and how it works.

What is STT?

STT technology turns spoken language into written text using advanced machine learning models.

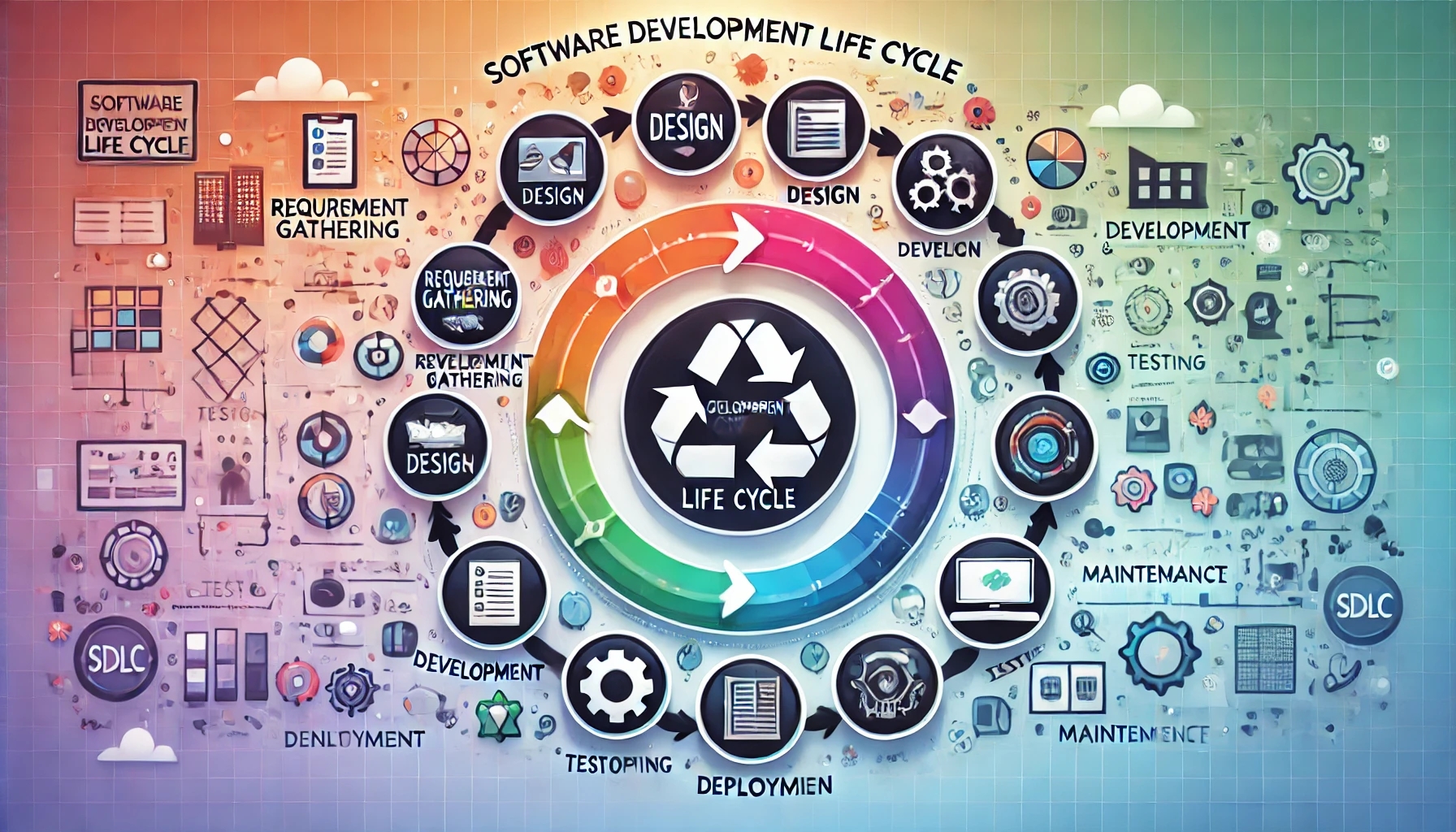

Workflow Diagram

Here is a simple diagram to help you understand the workflow:

Step-by-Step Breakdown of the Workflow

1. Capturing Speech Input:

The process starts at the user interface, where a microphone picks up speech as an analog waveform.

This waveform is unique to each speaker and also carries background noise, distortions, and other features. The captured audio first goes through analog-to-digital conversion. This step samples the waveform at a fixed rate (16,000 samples per second is common for speech) and quantizes each sample into a digital value. The resulting digital signal is ready for further processing.
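Analog-to-digital conversion comes down to two operations. First, the waveform is sampled at regular intervals. Second, each sample is quantized to one of a fixed set of integer levels. The minimal Python sketch below illustrates both, assuming a 16 kHz sample rate and 16-bit samples. The `digitize` helper and the 440 Hz test tone are hypothetical stand-ins; in practice this step happens in the audio hardware.

```python
import math

SAMPLE_RATE = 16_000  # samples per second (assumed; a common rate for speech)
BIT_DEPTH = 16        # bits per sample (assumed)


def digitize(signal, duration_s, sample_rate=SAMPLE_RATE, bit_depth=BIT_DEPTH):
    """Sample a continuous signal and quantize it to signed integers.

    `signal` is any function of time (in seconds) returning values in [-1, 1].
    """
    max_int = 2 ** (bit_depth - 1) - 1          # 32767 for 16-bit audio
    n_samples = round(duration_s * sample_rate)
    samples = []
    for n in range(n_samples):
        t = n / sample_rate                      # sampling: read the signal at discrete instants
        value = max(-1.0, min(1.0, signal(t)))   # clip anything outside the valid range
        samples.append(round(value * max_int))   # quantization: map to the nearest integer level
    return samples


def tone(t):
    """Hypothetical input: a 440 Hz tone at half amplitude."""
    return 0.5 * math.sin(2 * math.pi * 440 * t)


pcm = digitize(tone, duration_s=0.01)
print(len(pcm))  # 160 samples for 10 ms of audio at 16 kHz
```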

2. Audio Signal Processing:

Before the audio can be fed into a model, it must be cleaned and structured. Signal processing applies several transformations:

- Noise Reduction: Filtering algorithms reduce ambient noise and enhance the speaker's voice.

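- Framing: The audio signal is split into short frames, usually 20 to 40 milliseconds long. Over such a short span, speech is roughly stationary, so each frame's frequency content can be analyzed reliably.

- Windowing and Overlapping: Each frame is multiplied by a window function (for example, a Hamming window) that tapers its edges, and consecutive frames overlap. This smooths the transitions between frames, avoids abrupt changes in the analysis, and provides continuity for the model.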
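Here is a small sketch of framing and windowing in plain Python. It assumes 25 ms frames with a 10 ms hop, a common choice within the 20 to 40 ms range, and it uses a Hamming window. The function names are my own. Noise reduction is left out to keep the example short.

```python
import math


def frame_signal(samples, sample_rate, frame_ms=25, hop_ms=10):
    """Split a signal into overlapping frames.

    With a 25 ms frame and a 10 ms hop, consecutive frames overlap by 15 ms.
    Trailing samples that do not fill a whole frame are dropped.
    """
    frame_len = int(sample_rate * frame_ms / 1000)
    hop_len = int(sample_rate * hop_ms / 1000)
    return [
        samples[start:start + frame_len]
        for start in range(0, len(samples) - frame_len + 1, hop_len)
    ]


def hamming_window(n):
    """Hamming window: close to 1.0 in the middle, tapering to 0.08 at the edges."""
    return [0.54 - 0.46 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def windowed_frames(samples, sample_rate, frame_ms=25, hop_ms=10):
    """Frame the signal, then multiply every frame by the window."""
    frames = frame_signal(samples, sample_rate, frame_ms, hop_ms)
    if not frames:
        return []
    window = hamming_window(len(frames[0]))
    return [[s * w for s, w in zip(frame, window)] for frame in frames]


# One second of a constant signal at 16 kHz -> 98 frames of 400 samples each.
frames = windowed_frames([1.0] * 16_000, sample_rate=16_000)
print(len(frames), len(frames[0]))  # 98 400
```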

3. Feature Extraction:

Once the signal is processed, the system performs feature extraction, translating the audio into a numeric format that machines can work with. A spectrogram shows how the energy at each frequency changes over time and is often displayed as an image. Mel-Frequency Cepstral Coefficients (MFCCs) summarize each frame's spectrum as a short vector of coefficients on the mel scale, which approximates human pitch perception. The features extracted often include:

- Frequency: Determines pitch changes.

- Energy: Captures volume or loudness.

- Temporal Patterns: Maps out rhythm and emphasis in speech.
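To show what these features look like as numbers, the sketch below computes three things under my own simplifying assumptions:

- a frame's energy;
- its magnitude spectrum, from which the dominant frequency (related to pitch) can be read;
- the mel-scale conversion used when building MFCCs.

It uses a slow textbook DFT instead of an FFT. It also stops short of a full MFCC pipeline, which would additionally apply a mel filter bank, a logarithm, and a discrete cosine transform. Tracking these values from frame to frame is one way to capture temporal patterns.

```python
import math


def frame_energy(frame):
    """Energy (loudness) of a frame: the sum of squared sample values."""
    return sum(x * x for x in frame)


def magnitude_spectrum(frame):
    """Magnitudes of a naive discrete Fourier transform, bins 0..N/2.

    This is O(N^2) for clarity; real systems use an FFT.
    """
    n = len(frame)
    mags = []
    for k in range(n // 2 + 1):
        re = sum(x * math.cos(2 * math.pi * k * t / n) for t, x in enumerate(frame))
        im = -sum(x * math.sin(2 * math.pi * k * t / n) for t, x in enumerate(frame))
        mags.append(math.hypot(re, im))
    return mags


def dominant_frequency(frame, sample_rate):
    """Frequency (Hz) of the strongest spectral bin, ignoring the DC bin."""
    mags = magnitude_spectrum(frame)
    peak = max(range(1, len(mags)), key=lambda k: mags[k])
    return peak * sample_rate / len(frame)


def hz_to_mel(f_hz):
    """A widely used mel-scale formula for placing MFCC filter-bank bands."""
    return 2595 * math.log10(1 + f_hz / 700)


# Hypothetical 25 ms frame (400 samples at 16 kHz) of a 440 Hz tone.
sr = 16_000
frame = [math.sin(2 * math.pi * 440 * t / sr) for t in range(400)]
print(dominant_frequency(frame, sr))  # 440.0
print(round(frame_energy(frame)))     # 200
print(round(hz_to_mel(1000)))         # 1000
```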

4. Acoustic Model:

The acoustic model is usually a deep learning model, such as a Convolutional Neural Network (CNN) or a Recurrent Neural Network (RNN). It processes the features to identify phonemes, the smallest units of sound. In a hybrid STT model, the neural network is combined with a traditional Hidden Markov Model (HMM). The network estimates, for each frame, the probability of each phoneme (or phoneme state), while the HMM models how those sounds unfold over time. This division of labor improves phoneme prediction accuracy while keeping processing efficient. The output is a sequence of per-frame probability scores over phonemes.
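The sketch below is a deliberately tiny stand-in for an acoustic model. It is a single linear layer plus softmax that outputs a probability for each phoneme in every frame. The weights, the three-phoneme inventory, and the priors are hypothetical and hand-picked rather than trained. The `scaled_likelihoods` function shows a step commonly used in hybrid neural network/HMM systems. Dividing each posterior by the phoneme's prior probability gives a score proportional to p(frame | phoneme), which the HMM can use as its emission score. Real systems use deep networks and usually predict context-dependent HMM states rather than whole phonemes.

```python
import math

PHONEMES = ["k", "ae", "t"]  # hypothetical three-phoneme inventory


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores)                      # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def phoneme_posteriors(features, weights, biases):
    """Toy one-layer network: linear scores per phoneme, then softmax.

    Returns P(phoneme | frame features) for every frame.
    """
    posteriors = []
    for frame in features:
        scores = [sum(w * x for w, x in zip(row, frame)) + b
                  for row, b in zip(weights, biases)]
        posteriors.append(softmax(scores))
    return posteriors


def scaled_likelihoods(posteriors, priors):
    """Hybrid NN-HMM step: posterior / prior is proportional to p(frame | phoneme),
    which the HMM can use as its emission score."""
    return [[p / prior for p, prior in zip(frame, priors)] for frame in posteriors]


# Hypothetical two-dimensional features for three frames and hand-picked weights.
features = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
weights = [[2.0, 0.0], [0.0, 2.0], [-1.0, -1.0]]
biases = [0.0, 0.0, 0.0]

post = phoneme_posteriors(features, weights, biases)
best = [PHONEMES[max(range(len(p)), key=lambda i: p[i])] for p in post]
print(best)  # ['k', 'ae', 't']
```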

5. Phoneme Mapping:

The system then maps phonemes to actual words, using a pronunciation dictionary (lexicon) to convert recognized phoneme sequences into valid word candidates. Hybrid models combine rule-based approaches with statistical mapping to increase word prediction accuracy. The system also applies context-based constraints to eliminate improbable word combinations.
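A lexicon lookup can be sketched as a search over all the ways to split a phoneme sequence into known words. The five-word lexicon and its ARPAbet-style pronunciations below are hypothetical. Note that the homophones “their” and “there” both survive this step; choosing between them is left to the language model.

```python
# Hypothetical mini pronunciation lexicon using ARPAbet-style phoneme symbols.
LEXICON = {
    "their": ("DH", "EH", "R"),
    "there": ("DH", "EH", "R"),
    "is": ("IH", "Z"),
    "a": ("AH",),
    "cat": ("K", "AE", "T"),
}


def build_reverse_lexicon(lexicon):
    """Map each pronunciation to every word that shares it (homophones)."""
    reverse = {}
    for word, phones in lexicon.items():
        reverse.setdefault(phones, []).append(word)
    return reverse


def word_candidates(phones, reverse):
    """Return every way to split a phoneme sequence into lexicon words."""
    if not phones:
        return [[]]
    results = []
    for end in range(1, len(phones) + 1):
        for word in reverse.get(tuple(phones[:end]), []):
            for rest in word_candidates(phones[end:], reverse):
                results.append([word] + rest)
    return results


reverse = build_reverse_lexicon(LEXICON)
print(word_candidates(["DH", "EH", "R", "IH", "Z", "AH", "K", "AE", "T"], reverse))
# [['their', 'is', 'a', 'cat'], ['there', 'is', 'a', 'cat']]
```

This brute-force recursion is fine for a toy example, but its cost can grow exponentially with sequence length. Practical systems typically compile the lexicon into a search graph instead.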

6. Language Model:

The language model (LM) refines the output by adding semantic and syntactic context. Whether it is built with N-grams, Recurrent Neural Networks (RNNs), or Transformer models, the LM estimates how likely each candidate word sequence is, taking sentence structure, grammar, and context into account. This enables it to resolve homophones and other context-dependent word choices (e.g., “their” vs. “there”). This step is critical for applications like real-time translation and voice-activated commands, where context ensures coherence.
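As a minimal illustration of the N-gram approach, here is a bigram language model with add-one (Laplace) smoothing, trained on a hypothetical three-sentence corpus. It scores two candidate transcriptions that differ only in “their” vs. “there”. With this corpus, the sequence starting with “there is” gets the higher log-probability. Real language models are trained on far larger text collections and use better smoothing or neural architectures.

```python
import math
from collections import Counter


def train_bigram(sentences):
    """Count unigrams and bigrams, with <s> and </s> marking sentence boundaries."""
    unigrams, bigrams = Counter(), Counter()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        unigrams.update(tokens)
        bigrams.update(zip(tokens, tokens[1:]))
    return unigrams, bigrams


def log_prob(words, unigrams, bigrams):
    """Add-one (Laplace) smoothed bigram log-probability of a word sequence."""
    vocab_size = len(unigrams)
    tokens = ["<s>"] + words + ["</s>"]
    total = 0.0
    for prev, cur in zip(tokens, tokens[1:]):
        total += math.log((bigrams[(prev, cur)] + 1) / (unigrams[prev] + vocab_size))
    return total


# Hypothetical toy training corpus.
corpus = ["there is a cat", "there is a dog", "their cat is here"]
uni, bi = train_bigram(corpus)

for candidate in (["their", "is", "a", "cat"], ["there", "is", "a", "cat"]):
    print(" ".join(candidate), round(log_prob(candidate, uni, bi), 2))
```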

7. Decoding:

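During decoding, the system combines the outputs of the acoustic and language models to find the word sequence with the highest overall score. That score is typically the acoustic log-probability plus a weighted language model log-probability. Because the number of possible sequences is enormous, decoders rely on search algorithms. The Viterbi algorithm uses dynamic programming to find the single best path. Beam search keeps only the most promising partial hypotheses at each step. Decoding is optimized to minimize latency, especially in real-time applications where low delay is essential.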
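The Viterbi algorithm can be sketched as follows for a toy left-to-right HMM with three states for the word “cat” (k, ae, t). The transition and emission probabilities are hypothetical. In a hybrid system the emission scores would come from the acoustic model, and a full decoder would also fold in lexicon and language model scores and prune unlikely paths with a beam. Everything here is computed in log space to avoid numerical underflow.

```python
import math

NEG_INF = float("-inf")  # log(0): a transition that is not allowed


def viterbi(emission_logp, trans_logp, init_logp):
    """Most likely HMM state sequence, computed in log space.

    emission_logp[t][s]: log p(frame t | state s)
    trans_logp[r][s]:    log p(state s at t | state r at t-1)
    init_logp[s]:        log p(state s at t = 0)
    """
    n_states = len(init_logp)
    best = [init_logp[s] + emission_logp[0][s] for s in range(n_states)]
    backpointers = []
    for t in range(1, len(emission_logp)):
        new_best, pointers = [], []
        for s in range(n_states):
            # Best predecessor for state s: dynamic programming keeps only this one.
            prev = max(range(n_states), key=lambda r: best[r] + trans_logp[r][s])
            new_best.append(best[prev] + trans_logp[prev][s] + emission_logp[t][s])
            pointers.append(prev)
        best = new_best
        backpointers.append(pointers)
    # Trace back from the best final state.
    state = max(range(n_states), key=lambda s: best[s])
    score = best[state]
    path = [state]
    for pointers in reversed(backpointers):
        state = pointers[state]
        path.append(state)
    return path[::-1], score


log = math.log
states = ["k", "ae", "t"]
init = [0.0, NEG_INF, NEG_INF]                 # must start in "k"
trans = [[log(0.6), log(0.4), NEG_INF],        # k  -> k or ae
         [NEG_INF, log(0.6), log(0.4)],        # ae -> ae or t
         [NEG_INF, NEG_INF, 0.0]]              # t  -> t
emissions = [[log(0.8), log(0.1), log(0.1)],   # frame 0 sounds like "k"
             [log(0.1), log(0.8), log(0.1)],   # frames 1-2 sound like "ae"
             [log(0.1), log(0.8), log(0.1)],
             [log(0.1), log(0.1), log(0.8)]]   # frame 3 sounds like "t"

path, score = viterbi(emissions, trans, init)
print([states[s] for s in path])  # ['k', 'ae', 'ae', 't']
```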

8. Text Output & Error Correction:

After decoding, the raw text may still contain minor errors. An error correction model or post-processing algorithm performs spell-checking, grammar correction, and contextual adjustments. This phase may draw on training data about common mistakes, such as errors tied to particular accents or phrases that are often misheard. The goal is a final transcription that is more accurate and readable.
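Modern systems often use trained models for error correction, but a simple rule-based pass conveys the idea. The sketch below fixes phrases from a hypothetical table of known mishearings. It then capitalizes the first letter and adds closing punctuation. The `CORRECTIONS` table and the `post_process` function are illustrative assumptions, not part of any particular product.

```python
import re

# Hypothetical table of phrases the recognizer is known to mishear.
CORRECTIONS = {
    "wreck a nice beach": "recognize speech",
    "their is": "there is",
}


def post_process(raw_text, corrections=CORRECTIONS):
    """Rule-based cleanup: fix known mishearings, then capitalize and punctuate."""
    text = " ".join(raw_text.split())  # collapse stray whitespace
    for wrong, right in corrections.items():
        pattern = r"\b" + re.escape(wrong) + r"\b"
        text = re.sub(pattern, right, text, flags=re.IGNORECASE)
    if text:
        text = text[0].upper() + text[1:]   # capitalize the first letter
        if text[-1] not in ".?!":
            text += "."                     # add closing punctuation if missing
    return text


print(post_process("this app can  wreck a nice beach"))  # This app can recognize speech.
print(post_process("their is a cat"))                    # There is a cat.
```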

9. Final Text Output:

The final output is the fully transcribed, error-corrected text. This text can now be used in downstream applications such as subtitles, virtual assistant responses, or voice commands.

Vital Role of Speech-to-Text (STT) in Daily Life

1. Inclusion and Accessibility:

STT makes technology easier to use for everyone, including people with disabilities. People with visual or physical impairments can rely on speech instead of typing or reading. People who are deaf or hard of hearing can read live transcripts of spoken content. STT opens up more ways to communicate, navigate, and control connected devices, making the digital world more accessible to everyone.

2. Hands-Free Convenience and Efficiency:

For people living busy lives, STT makes multitasking easier. If you are driving, cooking, or working with your hands, STT lets you handle many tasks by voice instead of typing. You can send messages, get directions, or even take notes simply by speaking, making life easier and quicker.

3. Productivity at Work and Automation:

STT helps people at work by converting conversations and meetings into text, so no one has to write everything down. The text can later be saved, edited, or shared. This makes it much easier to maintain records and meeting notes and to make sure no details are lost. STT saves time and keeps information organized, making work easier and smoother.

Real Life Examples

1. Virtual Assistants:

Virtual assistants have transformed the way people interact with devices at home and on the go. STT lets users control their environment, ask questions, set reminders, and play music with their voice. This hands-free convenience has become indispensable in many daily routines because it improves both accessibility and ease of use. Examples include Alexa, Siri, and Google Assistant.

2. Healthcare:

STT technology enables doctors and other clinical staff to transcribe patient notes, prescriptions, and reports in real time. Doctors can then focus on the patient instead of typing at a computer. This saves time and reduces transcription errors, helping to keep patient records accurate. Solutions such as Dragon Medical One apply this approach, turning clinicians' speech into medical documentation quickly.

3. Customer Service Automation (Example: IVR Systems):

Many companies integrate their Interactive Voice Response (IVR) systems with STT to understand customer queries. Customers can simply say what they need. The STT system transcribes the request so it can be routed to the right department. This reduces waiting time, streamlines service delivery, and improves the customer experience with faster, more responsive support.

4. Real-Time Captioning:

STT is used in education and at events for live captioning of lectures, conferences, and webinars. Captioning makes the content accessible to people who are deaf or hard of hearing, as well as to non-native speakers. Captions help everyone follow along and stay engaged, creating a more inclusive environment.

STT is quickly becoming central to building a more accessible, faster, and better-connected world. As the technology continues to advance, it is likely to change the way we interact with our devices even further, making digital communication more natural and intuitive.

Conclusion

Speech-to-Text (STT) technology is becoming a helpful part of everyday life, making it easier for everyone to interact with devices. From allowing people to control their devices hands-free to making digital content accessible for those with disabilities, STT brings convenience and inclusivity to our routines. As it keeps improving, STT will become an even bigger part of how we communicate and get things done, making life just a little bit simpler for everyone.

About the Author

Hey, I'm Md Shamim Hossen, a Content Writer with a passion for tech, strategy, and clean storytelling. I turn AI and app development into content that resonates and drives real results. When I'm not writing, you'll find me exploring the latest SEO tools, researching, or traveling.
